Here is a list of quick, memorizable descriptions of various unsupervised algorithms that can be used for analysis, dimension reduction, and data cleaning. I put this list together for interview prep for various data science and MLE interviews. Note that there are some sections with TODO: notes that I left for myself. That's because these notes are unfinished, and I hope to come back and update this post with more details.

PCA

Theory

The Covariance Matrix

Let be an dataset and let be the matrix with entries (i.e., the column means repeated over each column). The covariance matrix of is defined to be

This is ultimately due to the identity

where the estimated expectation in the last line is viewing the columns as univariate random variables (so that isn't meant to be a dot product, although the expectation itself is). Thus, the entries of the covariance matrix are the pairwise covariances of the column vectors of .

Eigenvalues of the Covariance Matrix

Now, assume that . All of this is still true otherwise, but the calculations are more annoying. Because is symmetric, it is diagonalizable. Consider the eigendecomposition

where is the matrix whose columns are the eigenvectors of .

Consider the first principal component. By definition, this is the unit vector linear combination of the features which maximizes variance. So if we write

Then the vector maximizes the equation

Now, it is a fact that I looked up on the internet that the -norm of a matrix is equal to the largest singular value of that matrix (why?). It is also a fact that the singular values and eigenvalues coincide for a symmetric matrix (this is a straightforward computation). Thus, the final line in the above equation is equal to the largest eigenvalue of , and therefore the eigenvector of corresponding to this eigenvalue is in fact the first principal component.

The rest of the eigenvectors of are called the other principal components of

Collaborative Filtering

Problem Motivation

Suppose we are building a recommender system for a movie service with an explicit rating system. Users rate movies on a scale of 0 to 5 stars, or something. Imagine we have genres for titles in the form of a -dimensional vector for each movie , as well as known weights for genre preference of users in the form of -dimensional vectors for each user (in both cases, assuming the weights sum to 1). Then it would be easy to predict the potential rating of a movie by a user. The rating would be approximated by 5 times the dot product of and .

Alternating Least Squares

This suggests an optimization problem. If we know the user preference vectors , then learning the movie genre vectors is achieved by minimizing the expression

(where the summands are only for the r_{u,i}x^{(i)}\theta^{(u)}$ is achieved by minimizing the expression

So, the way we find both user and movie genre embeddings, we simply alternate minimizing the above expressions, with some initial random guesses. We can combine the above expressions into one:

We can also just minimize directly using gradient descent. I'm not sure if that's advantageous.

Low Rank Matrix Factorization

Let be the number of users and let be the number of movies, and let be the number of genres (called latent factors in generality). Let be the matrix of ratings, let be the matrix of genre weights for the movies, and let be the matrix of genre preferences for the users. Then

The fact that the matrix is low rank is actually highly important. It says that we don't need a lot of genres. That in fact, the full ratings matrix has a lot of dependencies (similarities between people and between movies, and also linear dependencies between people's preferences for various genres). We can rely on this low rank assumption when designing algorithms for recommender systems.

Other models I did not get a chance to write up

TODO: Write these up some time

- Singular Value Decomposition

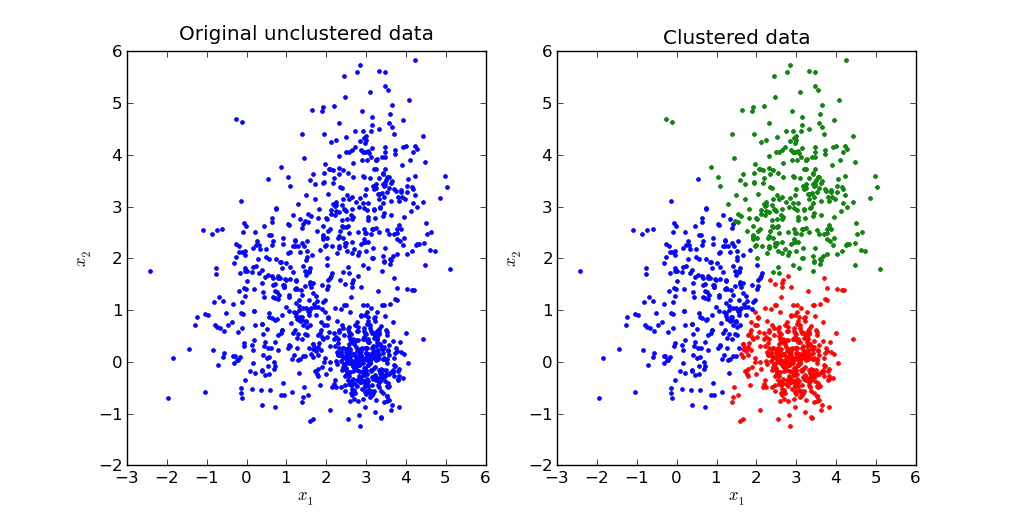

- K Means

- Robust Covariance

- One Clas SVM

- Isolation Forest