This begins a series of notes that I took while studying for a stats class in 2019 on edX here. These notes were incomplete, so take everything with a grain of salt. I was simply trying to understand hypothesis testing from a theoretical point of view.

Modes of Convergence

Definitions

-

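Almost sure convergence is the closest analogue of pointwise convergence, but it is actually weaker than pointwise. We say a sequence of random variables $(X_n)_{n \ge 1}$ converges almost surely to $X$ and write $X_n \xrightarrow{\text{a.s.}} X$ if

$$\mathbb{P}\left(\left\{\omega \in \Omega : \lim_{n \to \infty} X_n(\omega) = X(\omega)\right\}\right) = 1,$$

i.e., the set of points $\omega$ for which the sequence does not converge to $X(\omega)$ is negligible (it has probability zero).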
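
A standard toy example (my own illustration, not from the class) shows the gap between almost sure and pointwise convergence. Take $\Omega = [0, 1]$ with the uniform probability measure and $X_n(\omega) = \omega^n$. For every $\omega < 1$ the sequence tends to $0$, but at $\omega = 1$ it stays at $1$; since $\mathbb{P}(\{1\}) = 0$, we get $X_n \xrightarrow{\text{a.s.}} 0$ even though $X_n$ does not converge to $0$ at every point of $\Omega$. The sketch below just evaluates a few sample paths; the helper name `X` is hypothetical.

```python
def X(n: int, omega: float) -> float:
    """Hypothetical random variable X_n(omega) = omega**n on Omega = [0, 1]."""
    return omega ** n


# Evaluate the sample path n -> X_n(omega) for a few fixed outcomes omega.
for omega in (0.5, 0.9, 0.99, 1.0):
    path = [X(n, omega) for n in (1, 10, 100, 10_000)]
    print(f"omega={omega}: {path}")
# Every omega < 1 drives its path toward 0; only omega = 1 (a null set) stays at 1.
```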

-

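Another concept of convergence is convergence in quadratic mean, which is simply convergence in the $L^2$ space of the probability space. We write $X_n \xrightarrow{L^2} X$ if

$$\mathbb{E}\left[(X_n - X)^2\right] \to 0.$$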
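
As a sanity check of $L^2$ convergence (my own example, not from the course), take $X_n = \bar{X}_n$, the mean of $n$ iid Uniform(0, 1) draws, and $X = \mu = 1/2$. Then $\mathbb{E}[(\bar{X}_n - \mu)^2] = \sigma^2 / n = 1/(12n) \to 0$. The sketch below estimates this expectation by Monte Carlo; the function name and the choice of distribution are assumptions made for illustration.

```python
import random


def mse_of_sample_mean(n: int, reps: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of E[(Xbar_n - mu)^2] for iid Uniform(0, 1) draws."""
    rng = random.Random(seed)
    mu = 0.5
    total = 0.0
    for _ in range(reps):
        xbar = sum(rng.random() for _ in range(n)) / n
        total += (xbar - mu) ** 2
    return total / reps


for n in (10, 100, 1000):
    # Compare the estimate with the exact value sigma^2 / n = 1 / (12 n).
    print(n, mse_of_sample_mean(n), 1 / (12 * n))
```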

-

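We say a sequence of random variables converges in probability to $X$ and write $X_n \xrightarrow{\mathbb{P}} X$ if for all $\varepsilon > 0$,

$$\mathbb{P}\left(|X_n - X| > \varepsilon\right) \to 0,$$

where here convergence is that of real numbers. Both almost sure convergence and $L^2$ convergence imply convergence in probability.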
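
To see convergence in probability numerically, one can estimate $\mathbb{P}(|\bar{X}_n - \mu| > \varepsilon)$ for the mean $\bar{X}_n$ of $n$ iid Uniform(0, 1) draws and watch it shrink as $n$ grows. The sketch below is my own illustration ($\varepsilon = 0.05$ and the uniform distribution are arbitrary choices). It also prints Chebyshev's bound $\mathbb{E}[(\bar{X}_n - \mu)^2]/\varepsilon^2 = 1/(12 n \varepsilon^2)$, which is the inequality that turns $L^2$ convergence into convergence in probability.

```python
import random


def exceed_prob(n: int, eps: float = 0.05, reps: int = 2000, seed: int = 1) -> float:
    """Monte Carlo estimate of P(|Xbar_n - 1/2| > eps) for iid Uniform(0, 1) draws."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        xbar = sum(rng.random() for _ in range(n)) / n
        if abs(xbar - 0.5) > eps:
            hits += 1
    return hits / reps


def chebyshev_bound(n: int, eps: float = 0.05) -> float:
    """Chebyshev upper bound Var(Xbar_n) / eps^2 = (1/12) / (n * eps^2)."""
    return (1 / 12) / (n * eps ** 2)


for n in (10, 100, 1000):
    print(n, exceed_prob(n), chebyshev_bound(n))
```

For small $n$ the Chebyshev bound exceeds $1$ and says nothing, but it still forces the probability to $0$ as $n \to \infty$.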

-

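We say $X_n \xrightarrow{d} X$ (convergence in distribution) if the sequence of CDFs $F_{X_n}$ converges pointwise to $F_X$ at every point where $F_X$ is continuous. Convergence in probability implies convergence in distribution. The converse is true when $X$ is a point mass, i.e., almost surely equal to a constant.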
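
Requiring convergence only at continuity points of $F_X$ is essential, as a point-mass example (my own) shows. Let $X_n \equiv 1/n$ and $X \equiv 0$. Then $F_{X_n}(x) \to F_X(x)$ for every $x \ne 0$, yet $F_{X_n}(0) = 0$ for all $n$ while $F_X(0) = 1$. Because $0$ is the only discontinuity of $F_X$, this is still convergence in distribution. The helper `point_mass_cdf` is hypothetical.

```python
from typing import Callable


def point_mass_cdf(a: float) -> Callable[[float], float]:
    """CDF of the constant random variable equal to a: F(x) = 1 if x >= a, else 0."""
    return lambda x: 1.0 if x >= a else 0.0


F = point_mass_cdf(0.0)  # CDF of the limit X = 0


def F_n(n: int) -> Callable[[float], float]:
    """CDF of X_n = 1/n."""
    return point_mass_cdf(1.0 / n)


for x in (-0.1, 0.0, 0.001, 0.1):
    # Compare F_{X_n}(x) at a large n with the limit F_X(x).
    print(x, F_n(10**6)(x), F(x))
# They agree everywhere except x = 0, the discontinuity of F.
```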

-

We briefly talk about uniform convergence later in the class, which is simply convergence in the $L^\infty$ (sup-norm) space, i.e., $\sup_x |f_n(x) - f(x)| \to 0$.
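
To contrast uniform and pointwise convergence, here is a sketch (my own example, not from the class) that approximates $\sup_{x \in [0,1]} |f_n(x)|$ on a fine grid for two sequences. Both converge to $0$ pointwise on $[0, 1]$, but $f_n(x) = nx/(1 + n^2x^2)$ always peaks at $1/2$ (at $x = 1/n$), so it does not converge uniformly, while $g_n(x) = x/(1 + nx^2)$ has supremum $1/(2\sqrt{n}) \to 0$. A grid maximum only approximates the true supremum.

```python
from typing import Callable, Sequence

GRID = [i / 100_000 for i in range(100_001)]  # 100,001 points covering [0, 1]


def sup_norm(f: Callable[[float], float], grid: Sequence[float] = GRID) -> float:
    """Approximate sup_x |f(x)| by the maximum over a finite grid."""
    return max(abs(f(x)) for x in grid)


def f(n: int) -> Callable[[float], float]:
    """Converges to 0 pointwise but not uniformly: peak value 1/2 at x = 1/n."""
    return lambda x: n * x / (1 + (n * x) ** 2)


def g(n: int) -> Callable[[float], float]:
    """Converges to 0 uniformly: sup is 1/(2*sqrt(n)), attained at x = 1/sqrt(n)."""
    return lambda x: x / (1 + n * x * x)


for n in (10, 100, 10_000):
    print(n, sup_norm(f(n)), sup_norm(g(n)))
```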

Limits of Sequences of Random Variables

-

Law of Large Numbers: Let $X_1, X_2, \dots$ be an iid sequence of random variables with finite mean $\mu = \mathbb{E}[X_1]$. Then the sample mean $\bar{X}_n = \frac{1}{n} \sum_{i=1}^n X_i$ converges to $\mu$ in probability (the weak law). Since the limit is a constant, this is equivalent to convergence in distribution. The strong law of large numbers asserts the stronger conclusion $\bar{X}_n \xrightarrow{\text{a.s.}} \mu$ under the same assumptions (iid with finite mean), but I did not use it in the class.
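
A quick simulation of the law of large numbers (my own sketch; the Exponential distribution with rate $\lambda = 2$, so $\mu = 1/2$, is an arbitrary choice) tracks the running sample mean $\bar{X}_n$ along one simulated sequence. Following a single sequence is really a picture of the strong law, but either way the running means visibly settle near $\mu$.

```python
import random
from typing import Iterable, List


def running_means(samples: Iterable[float]) -> List[float]:
    """Return [Xbar_1, Xbar_2, ...], the sample mean after each new observation."""
    means: List[float] = []
    total = 0.0
    for i, x in enumerate(samples, start=1):
        total += x
        means.append(total / i)
    return means


rng = random.Random(42)
lam = 2.0  # rate parameter; the true mean is mu = 1 / lam = 0.5
xs = [rng.expovariate(lam) for _ in range(100_000)]
means = running_means(xs)
for n in (10, 100, 1_000, 10_000, 100_000):
    print(n, means[n - 1])
```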

-

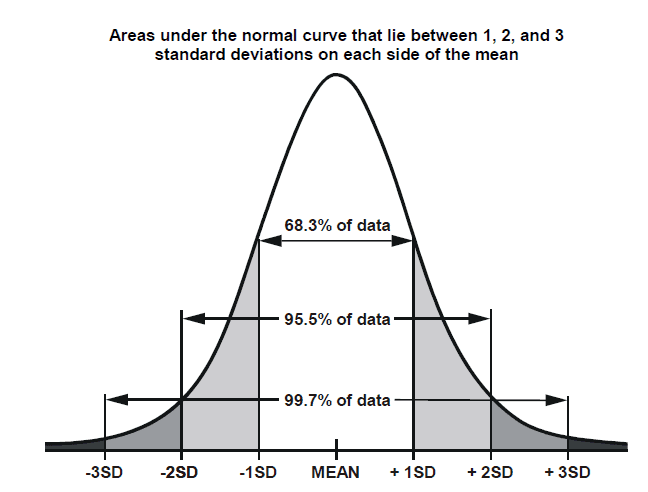

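Central Limit Theorem: Let $X_1, X_2, \dots$ be an iid sequence of random variables with finite mean $\mu$ and finite variance $\sigma^2 > 0$. Then

$$\sqrt{n}\,\frac{\bar{X}_n - \mu}{\sigma} \xrightarrow{d} \mathcal{N}(0, 1),$$

where convergence is in distribution. It is important to note that the CLT holds for multivariate distributions as well: if the $X_i$ are iid random vectors in $\mathbb{R}^d$ with mean $\mu$ and covariance matrix $\Sigma$, then $\sqrt{n}\,(\bar{X}_n - \mu) \xrightarrow{d} \mathcal{N}_d(0, \Sigma)$.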
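
The sketch below (my own illustration, using only the Python standard library) checks the univariate CLT by simulation. It standardizes sample means of $n = 50$ Exponential(1) draws (so $\mu = \sigma = 1$) and measures the largest gap between their empirical CDF and the $\mathcal{N}(0, 1)$ CDF, a Kolmogorov-Smirnov-type distance. The distribution, $n$, and the number of repetitions are arbitrary choices; for a skewed distribution like the exponential, a small gap remains at moderate $n$.

```python
import math
import random
from statistics import NormalDist
from typing import List


def standardized_means(n: int, reps: int, seed: int = 0) -> List[float]:
    """Draw reps values of sqrt(n) * (Xbar_n - mu) / sigma for Exponential(1) data."""
    rng = random.Random(seed)
    mu, sigma = 1.0, 1.0  # Exponential(rate=1) has mean 1 and variance 1
    zs = []
    for _ in range(reps):
        xbar = sum(rng.expovariate(1.0) for _ in range(n)) / n
        zs.append(math.sqrt(n) * (xbar - mu) / sigma)
    return zs


def ks_distance(zs: List[float]) -> float:
    """Max gap between the empirical CDF of zs and the standard normal CDF."""
    phi = NormalDist().cdf
    zs = sorted(zs)
    m = len(zs)
    # The empirical CDF jumps from i/m to (i+1)/m at the i-th sorted point.
    return max(max((i + 1) / m - phi(z), phi(z) - i / m) for i, z in enumerate(zs))


zs = standardized_means(n=50, reps=5000)
print("KS distance to N(0,1):", ks_distance(zs))
print("fraction with |Z| <= 1.96:", sum(abs(z) <= 1.96 for z in zs) / len(zs))
```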

The Delta Method

-

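The Delta Method: Let $(Z_n)_{n \ge 1}$ be a sequence of $d$-dimensional random vectors and suppose

$$\sqrt{n}\,(Z_n - \theta) \xrightarrow{d} \mathcal{N}_d(0, \Sigma)$$

for some $\theta \in \mathbb{R}^d$ and covariance matrix $\Sigma$. Then for any continuously differentiable function $g : \mathbb{R}^d \to \mathbb{R}$, we have

$$\sqrt{n}\,\big(g(Z_n) - g(\theta)\big) \xrightarrow{d} \mathcal{N}\big(0,\; \nabla g(\theta)^\top \Sigma \, \nabla g(\theta)\big).$$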

-

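The most obvious application of the Delta method is in parameter estimation for distributions whose parameter is something other than a simple mean. For example, the exponential distribution with rate $\lambda$ has mean $1/\lambda$, so the natural estimator is $\hat{\lambda} = 1/\bar{X}_n$, and the Delta method with $g(x) = 1/x$ gives its asymptotic distribution. We will see the Delta method again when we derive the maximum likelihood estimator.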
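
Here is how the Delta method plays out for the exponential example, as a sketch under the assumption that Exp($\lambda$) is parametrized by its rate, so $\mu = 1/\lambda$ and $\sigma^2 = 1/\lambda^2$. The CLT gives $\sqrt{n}(\bar{X}_n - 1/\lambda) \xrightarrow{d} \mathcal{N}(0, 1/\lambda^2)$, and with $g(x) = 1/x$ we have $g'(1/\lambda) = -\lambda^2$, so the asymptotic variance is $g'(1/\lambda)^2 \sigma^2 = \lambda^4 \cdot \lambda^{-2} = \lambda^2$:

$$\sqrt{n}\,\big(\hat{\lambda} - \lambda\big) \xrightarrow{d} \mathcal{N}(0, \lambda^2), \qquad \hat{\lambda} = 1/\bar{X}_n.$$

The simulation below is my own check ($\lambda = 2$, $n = 200$, and 4000 repetitions are arbitrary); it compares the empirical variance of $\sqrt{n}(\hat{\lambda} - \lambda)$ with $\lambda^2 = 4$.

```python
import math
import random
import statistics
from typing import List


def delta_method_draws(lam: float, n: int, reps: int, seed: int = 0) -> List[float]:
    """Simulate sqrt(n) * (lam_hat - lam), where lam_hat = 1 / Xbar_n for Exp(rate=lam) data."""
    rng = random.Random(seed)
    draws = []
    for _ in range(reps):
        xbar = sum(rng.expovariate(lam) for _ in range(n)) / n
        lam_hat = 1.0 / xbar  # plug-in estimator g(Xbar_n) with g(x) = 1/x
        draws.append(math.sqrt(n) * (lam_hat - lam))
    return draws


lam, n = 2.0, 200
draws = delta_method_draws(lam, n, reps=4000)
print("empirical variance:", statistics.variance(draws))
print("Delta-method variance lam^2:", lam ** 2)
```

At finite $n$ the empirical variance sits slightly above $\lambda^2$, since $\hat{\lambda}$ is a nonlinear function of $\bar{X}_n$; the gap shrinks as $n$ grows.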