Adding some notes I took on recommender systems while working at Vizio. This is not proprietary knowledge, and is just a summary of some metrics introduced in a paper linked below.

Notation

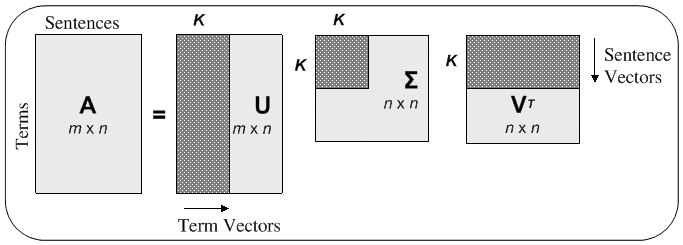

We are primarily concerned with implicit feedback recommender systems, specifically those based on matrix factorization methods (ALS, SVD, etc.). In this family of algorithms, the goal is to factor the feedback matrix into a product of two rectangular matrices of latent factor representations, one for users and one for titles.

Notation for Explicit Feedback Models

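More precisely, let $m$ be the number of users, let $n$ be the number of movies, and let $f$ be the number of latent factors. Let $R \in \mathbb{R}^{m \times n}$ be the feedback matrix, so that $R = (r_{ui})$, where $r_{ui}$ is some feedback signal about the preference of user $u$ for title $i$. Let $Y \in \mathbb{R}^{n \times f}$ be the matrix of genre weights for the movies, and let $X \in \mathbb{R}^{m \times f}$ be the matrix of genre preferences for the users, with rows $y_i$ and $x_u$ respectively. Then

$$R \approx XY^\top, \qquad \text{i.e.,} \qquad r_{ui} \approx \hat{r}_{ui} := x_u^\top y_i.$$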
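A minimal sketch of the prediction step in plain Python, using lists of lists instead of a linear algebra library to stay dependency-free. The names `predict_scores`, `X`, and `Y` are illustrative: `X` holds one row $x_u$ per user, `Y` one row $y_i$ per title, and the numbers are made up.

```python
def predict_scores(X, Y):
    """Return the m x n matrix R_hat = X Y^T, where R_hat[u][i] = x_u . y_i."""
    return [[sum(xu_k * yi_k for xu_k, yi_k in zip(x_u, y_i)) for y_i in Y] for x_u in X]


# Two users, three titles, f = 2 latent factors (made-up values).
X = [[1.0, 0.0],
     [0.5, 2.0]]
Y = [[1.0, 1.0],
     [0.0, 3.0],
     [2.0, 0.0]]
R_hat = predict_scores(X, Y)
print(R_hat)  # [[1.0, 0.0, 2.0], [2.5, 6.0, 1.0]]
```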

Implicit Feedback

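The above is the traditional setting of explicit feedback, where $r_{ui}$ is something like a rating (thumbs up/down, or a 0 to 5 score). In this setting, the feedback matrix represents preference. In the implicit feedback setting, $r_{ui}$ is usually based on consumption (number of views, for example). In this case, the feedback matrix represents confidence about titles. As such, there is no notion of negative feedback: $r_{ui} = 0$ could mean the user dislikes the title, or simply never came across it. One way to deal with this, outlined in the Hu, Koren, Volinsky paper, is to use a preference signal

$$p_{ui} = \begin{cases} 1 & \text{if } r_{ui} > 0, \\ 0 & \text{if } r_{ui} = 0, \end{cases}$$

and a confidence signal

$$c_{ui} = 1 + \alpha r_{ui},$$

where $\alpha > 0$ is a tuning parameter. The confidence signal is used as a per-entry weight in the least squares objective that alternating least squares minimizes, while the feedback matrix is replaced with the preference matrix $P = (p_{ui})$:

$$\min_{X, Y} \sum_{u, i} c_{ui} \left(p_{ui} - x_u^\top y_i\right)^2 + \lambda \left(\sum_u \lVert x_u \rVert^2 + \sum_i \lVert y_i \rVert^2\right).$$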
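The implicit feedback transformation and one ALS half-step can be sketched as follows, assuming small dense matrices stored as Python lists. The helper names (`preference_and_confidence`, `solve`, `update_user_factor`) are hypothetical. The user update uses the closed form $x_u = (Y^\top C^u Y + \lambda I)^{-1} Y^\top C^u p(u)$ from the Hu et al. paper, where $C^u$ is the diagonal matrix of $c_{ui}$ over titles and $p(u)$ is the vector of $p_{ui}$. The default `alpha=40.0` is an assumption you would tune on your own data.

```python
def preference_and_confidence(R, alpha=40.0):
    """Map raw implicit feedback r_ui (e.g. view counts) to p_ui and c_ui = 1 + alpha * r_ui."""
    P = [[1 if r > 0 else 0 for r in row] for row in R]
    C = [[1.0 + alpha * r for r in row] for row in R]
    return P, C


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A small, square, nonsingular)."""
    n = len(A)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]  # augmented copy; inputs stay untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def update_user_factor(Y, p_u, c_u, lam):
    """Solve (Y^T C^u Y + lam I) x_u = Y^T C^u p(u) with the title factors Y held fixed."""
    f = len(Y[0])
    A = [[lam if a == b else 0.0 for b in range(f)] for a in range(f)]
    rhs = [0.0] * f
    for y_i, p_ui, c_ui in zip(Y, p_u, c_u):
        for a in range(f):
            rhs[a] += c_ui * p_ui * y_i[a]
            for b in range(f):
                A[a][b] += c_ui * y_i[a] * y_i[b]
    return solve(A, rhs)


R = [[3, 0, 1]]  # one user's view counts for three titles (made up)
P, C = preference_and_confidence(R, alpha=2.0)
print(P, C)  # [[1, 0, 1]] [[7.0, 1.0, 3.0]]
Y = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # made-up title factors, f = 2
x_u = update_user_factor(Y, P[0], C[0], lam=0.1)
```

This naive version loops over every title for each user, which is fine for a sketch. The Hu et al. paper avoids that cost by precomputing $Y^\top Y$ and writing $Y^\top C^u Y = Y^\top Y + Y^\top (C^u - I) Y$, where $C^u - I$ is nonzero only for titles the user consumed.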

Metrics

Recall (haha) that precision is the ratio of true positives to reported positives. In the language of recommender systems, this is the proportion of recommendations that are actually relevant. Similarly, recall is the proportion of relevant titles that are recommended.
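For a single user, this might look like the sketch below, with recommendations and relevant titles given as collections of title ids (a hypothetical representation). Returning 0.0 for an empty collection is a convention assumed here, not something the definitions dictate.

```python
def precision_recall(recommended, relevant):
    """Precision and recall of recommended title ids against the set of relevant ones."""
    recommended = set(recommended)
    relevant = set(relevant)
    true_positives = len(recommended & relevant)
    precision = true_positives / len(recommended) if recommended else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    return precision, recall


# 4 recommendations, 2 of which are among the user's 5 relevant titles.
print(precision_recall(["a", "b", "c", "d"], {"a", "c", "e", "f", "g"}))  # (0.5, 0.4)
```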

We define relevance in terms of $P$: if $p_{ui} = 1$, title $i$ is relevant for user $u$; otherwise it is not. We can define recommendations in a couple of different ways. If we form predictions $\hat{R} = XY^\top$ and rank titles by the (floating point) values of these predictions, then the recommendations could either be the top $k$ titles in this ranking, or the top $k$ excluding those whose score falls below a predefined relevance threshold. Either way, we have a well-defined notion of true and false positives and negatives.
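Both ways of forming recommendations can be sketched for a single user as follows. Here `scores_u` stands for the user's predicted scores $\hat{r}_{ui}$ indexed by title and `p_u` for the user's $p_{ui}$ values; the names are hypothetical and the threshold in the example is arbitrary.

```python
def recommend(scores_u, k, threshold=None):
    """Up to k title indices for one user, ranked by predicted score (highest first).

    If threshold is given, titles in the top k whose score is below it are dropped.
    """
    ranked = sorted(range(len(scores_u)), key=lambda i: scores_u[i], reverse=True)
    top = ranked[:k]
    if threshold is not None:
        top = [i for i in top if scores_u[i] >= threshold]
    return top


def relevant_titles(p_u):
    """Titles with p_ui = 1 count as relevant for the user."""
    return {i for i, p in enumerate(p_u) if p == 1}


scores_u = [0.9, 0.1, 0.75, 0.4, 0.05]
p_u = [1, 0, 0, 1, 0]
print(recommend(scores_u, k=3))                 # [0, 2, 3]
print(recommend(scores_u, k=3, threshold=0.5))  # [0, 2]
print(relevant_titles(p_u))                     # {0, 3}
```

With either list, a recommended title in `relevant_titles(p_u)` is a true positive, a recommended title outside it is a false positive, and so on.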

MAP@k

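For any user $u$ and any positive integer $k$, let $\mathrm{rel}_u(j) = 1$ if the $j$-th ranked recommendation for $u$ is relevant and $0$ otherwise, and define precision at $k$ as

$$P@k(u) = \frac{1}{k} \sum_{j=1}^{k} \mathrm{rel}_u(j).$$

Then we can define average precision at $k$ for any user $u$ as the average precision seen at $j$, as $j$ ranges from 1 to $k$:

$$\mathrm{AP@}k(u) = \frac{1}{k} \sum_{j=1}^{k} P@j(u).$$

We define mean average precision at $k$ to be the mean of AP@$k$ over all $m$ users:

$$\mathrm{MAP@}k = \frac{1}{m} \sum_{u=1}^{m} \mathrm{AP@}k(u).$$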
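A minimal sketch of these three quantities, assuming each user's ranked recommendations are summarized as a 0/1 list `rels` (1 where the recommendation at that position is relevant) and taking AP@k to be the plain average of precision at $j = 1, \dots, k$. The helper names are hypothetical. In the example both users have the same precision at 3 (2/3), but AP@3 rewards the first user for putting its hits at the top (8/9 versus 7/18).

```python
def precision_at(rels, k):
    """P@k for one user; rels[j - 1] is 1 if the j-th ranked recommendation is relevant.

    Assumes len(rels) >= k.
    """
    return sum(rels[:k]) / k


def average_precision_at(rels, k):
    """AP@k for one user, taken here as the plain average of P@j for j = 1..k."""
    return sum(precision_at(rels, j) for j in range(1, k + 1)) / k


def map_at(all_rels, k):
    """MAP@k: the mean of AP@k over users, with one relevance list per user."""
    return sum(average_precision_at(rels, k) for rels in all_rels) / len(all_rels)


user_a = [1, 1, 0]  # two relevant titles, ranked first and second
user_b = [0, 1, 1]  # two relevant titles, ranked second and third
print(average_precision_at(user_a, 3), average_precision_at(user_b, 3))
print(map_at([user_a, user_b], 3))
```

If you prefer the common variant that only counts $P@j$ at positions holding a relevant title and divides by the smaller of $k$ and the user's number of relevant titles, only `average_precision_at` needs to change.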

Why MAR@k Is Not Very Useful

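We can adapt the above formulas for a recall-oriented metric instead, by defining AR@$k$ to be the average recall seen at $j$, as $j$ ranges from 1 to $k$. In this case, the formula becomes

$$\mathrm{AR@}k(u) = \frac{1}{k} \sum_{j=1}^{k} R@j(u) = \frac{1}{k} \sum_{j=1}^{k} \frac{1}{N_u} \sum_{i=1}^{j} \mathrm{rel}_u(i),$$

where $N_u$ is the total number of positives for user $u$ (i.e., the total number of titles relevant to $u$).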

The first issue is that recall depends more on the titles not recommended than on the titles recommended: every term in the sum shares the denominator $N_u$, which dominates when the user has watched a lot of titles, and $R@j(u)$ can only grow as $j$ increases. As a result, the above equation is dominated by the final term in the sum, and there is not a lot of information gained over simply averaging recall at $k$ over all users:

$$\mathrm{MR@}k = \frac{1}{m} \sum_{u=1}^{m} R@k(u).$$

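The second issue with this metric is that the scale is no longer intuitive. To see this, consider the best possible case, where all recommended titles are relevant, so that $\mathrm{rel}_u(j) = 1$ for every $j \le k$. Then we would have

$$\mathrm{AR@}k(u) = \frac{1}{k} \sum_{j=1}^{k} \frac{j}{N_u} = \frac{k + 1}{2 N_u},$$

which is not equal to 1 in general (since $N_u \ge k$ here, it equals 1 only when $k = N_u = 1$), and will be a very small number whenever $N_u$ is much larger than $k$.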
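The best-case arithmetic is easy to check numerically. This sketch (hypothetical helper names; `rels` is a 0/1 list marking which ranked recommendations are relevant and `n_relevant` is the user's total number of relevant titles) takes AR@k to be the plain average of recall at $j = 1, \dots, k$, and evaluates a perfect top-10 list for a user with 50 relevant titles. The result should come out to $(k + 1) / (2 \cdot 50) = 0.11$, even though every single recommendation was a hit.

```python
def recall_at(rels, k, n_relevant):
    """R@k for one user: share of the user's n_relevant relevant titles found in the top k."""
    return sum(rels[:k]) / n_relevant


def average_recall_at(rels, k, n_relevant):
    """AR@k for one user, taken as the plain average of R@j for j = 1..k."""
    return sum(recall_at(rels, j, n_relevant) for j in range(1, k + 1)) / k


# Best case: all k = 10 recommendations are relevant, but the user watched 50 titles.
k, n_relevant = 10, 50
perfect = [1] * k
print(average_recall_at(perfect, k, n_relevant))  # about 0.11, i.e. (k + 1) / (2 * 50)
print(recall_at(perfect, k, n_relevant))          # 0.2
```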

Expected Percentile Ranking

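The Hu et al. paper used expected percentile ranking as one of its main metrics for comparing recommender systems. It is easy enough to define. Let $\mathrm{rank}_{ui}$ denote the percentile rank of title $i$ for user $u$, i.e., the position of title $i$ in $u$'s ranked list of recommendations (ordered by predicted score $\hat{r}_{ui}$) divided by the number of titles recommended, so that 0% is the top of the list and 100% is the bottom. Then

$$\overline{\mathrm{rank}} = \frac{\sum_{u,i} r^t_{ui} \, \mathrm{rank}_{ui}}{\sum_{u,i} r^t_{ui}},$$

where $r^t_{ui}$ denotes the feedback (e.g., number of views) observed during the test period.

Thus, expected percentile rank can be thought of as an average of percentile rank weighted by consumption, so the range is 0% to 100% and lower is better. For reference, ranking titles at random gives an expected percentile rank of about 50%.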
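A minimal sketch of expected percentile rank for dense score and test-feedback matrices stored as lists of lists, with ranks expressed as fractions in $[0, 1]$ rather than percentages. It assumes each user's full list of titles is ranked and, as an assumption, maps 0-based position $j$ in a list of $n \ge 2$ titles to $j / (n - 1)$, so the top title gets 0.0 and the bottom one 1.0. The helper names are hypothetical.

```python
def percentile_ranks(scores_u):
    """Percentile rank of every title for one user: 0.0 for the top-scored title, 1.0 for the last.

    Assumes at least two titles; 0-based position j maps to j / (n - 1).
    """
    n = len(scores_u)
    order = sorted(range(n), key=lambda i: scores_u[i], reverse=True)
    ranks = [0.0] * n
    for position, i in enumerate(order):
        ranks[i] = position / (n - 1)
    return ranks


def expected_percentile_rank(test_feedback, scores):
    """Consumption-weighted mean of percentile rank over all users and titles.

    test_feedback[u][i] plays the role of r^t_ui (e.g. test-period views);
    scores[u][i] is the predicted score r_hat_ui from the model trained on earlier data.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for r_u, s_u in zip(test_feedback, scores):
        for r_ui, rank_ui in zip(r_u, percentile_ranks(s_u)):
            weighted_sum += r_ui * rank_ui
            total_weight += r_ui
    return weighted_sum / total_weight


scores = [[0.9, 0.5, 0.1],
          [0.2, 0.8, 0.4]]
test_feedback = [[3, 1, 0],
                 [0, 0, 2]]
print(expected_percentile_rank(test_feedback, scores))  # 0.25
```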

Why this tells a different story than precision

$\overline{\mathrm{rank}}$ is a normalized, consumption-weighted sum of percentile ranks. Thus, it is higher (worse) if heavily viewed titles are ranked low or omitted from our recommendations, even if precision is high. From this point of view, expected percentile ranking can be thought of as a recall-oriented metric.
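A toy illustration of this point, with made-up numbers: one user, six titles, test-period views in `views`, and two hypothetical models that both put two watched titles in their top 2, so precision at 2 is 1.0 for each. Model A ranks the most-watched title last, and its expected percentile rank is far worse. The helpers reuse the assumed position-to-percentile mapping $j / (n - 1)$.

```python
def percentile_ranks(scores):
    """0.0 for the top-scored title, 1.0 for the last (assumes at least two titles)."""
    n = len(scores)
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    ranks = [0.0] * n
    for position, i in enumerate(order):
        ranks[i] = position / (n - 1)
    return ranks


def expected_rank(views, scores):
    """View-weighted mean percentile rank for a single user."""
    ranks = percentile_ranks(scores)
    return sum(v * r for v, r in zip(views, ranks)) / sum(views)


def precision_at_k(views, scores, k):
    """Share of the top-k titles that were watched at least once in the test period."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sum(1 for i in top if views[i] > 0) / k


views = [10, 1, 1, 0, 0, 0]               # title 0 is by far the most watched
model_a = [0.1, 0.9, 0.8, 0.5, 0.4, 0.3]  # ranks title 0 last
model_b = [0.9, 0.8, 0.3, 0.5, 0.4, 0.1]  # ranks title 0 first
for name, scores in [("A", model_a), ("B", model_b)]:
    print(name, precision_at_k(views, scores, 2), round(expected_rank(views, scores), 3))
# A 1.0 0.85
# B 1.0 0.083
```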

Empirical CDF of percentile ranking

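The authors also look at the distribution of percentile rankings by plotting its cumulative distribution function (CDF) for each model and comparing the curves. This seems fairly qualitative, unless we summarize each curve with a single number, such as the area under it (AUC).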
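Here is a sketch of that summary, assuming the CDF is weighted by test-period consumption in the same way as expected percentile rank (helper names hypothetical, data made up). One thing worth knowing before reaching for AUC: for any distribution on $[0, 1]$, the area under its CDF equals one minus its mean, so the area under this consumption-weighted CDF is exactly one minus the expected percentile rank and adds no new information. Reading the curve at a few fixed points (say, the share of consumption ranked in the top 10%) is one way to get more out of the plot.

```python
def weighted_cdf(ranks, weights):
    """Return F(x): share of total weight whose percentile rank is <= x."""
    total = sum(weights)
    return lambda x: sum(w for r, w in zip(ranks, weights) if r <= x) / total


def area_under_cdf(ranks, weights):
    """Integrate the step-function CDF exactly over [0, 1], one flat segment at a time."""
    total = sum(weights)
    area, cumulative, prev_x = 0.0, 0.0, 0.0
    for r, w in sorted(zip(ranks, weights)):
        area += (cumulative / total) * (r - prev_x)  # F is constant on [prev_x, r)
        cumulative += w
        prev_x = r
    return area + (cumulative / total) * (1.0 - prev_x)  # F is flat from the last rank to 1


ranks = [0.0, 0.5, 0.25]  # percentile ranks for three (user, title) pairs
weights = [3, 1, 2]       # matching test-period views
F = weighted_cdf(ranks, weights)
print([F(x) for x in (0.0, 0.25, 0.5, 1.0)])
auc = area_under_cdf(ranks, weights)
mean_rank = sum(w * r for r, w in zip(ranks, weights)) / sum(weights)
print(auc, 1.0 - mean_rank)  # the two agree up to floating point rounding
```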