I was studying reinforcement learning a while ago, attempting to educate myself about deep Q-learning. As part of that effort, I read through the first few chapters of Reinforcement Learning: An Introduction by Sutton and Barto. Here are my notes on Chapter 3. Like all of my other notes, these were never intended to be shared, so apologies in advance if they make no sense to anyone.

Chapter 3

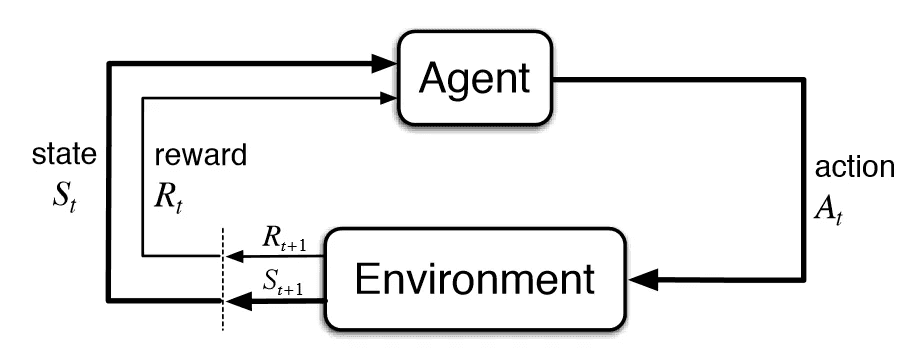

Agent-Environment Interface

They mainly cover basic terminology here. The main difference from bandit problems is that the state can change with each action.

- $\mathcal{S}$ = state space, $\mathcal{A}$ = action space, $\mathcal{R}$ = reward space. These are all finite, with $\mathcal{R} \subseteq \mathbb{R}$.

- As with the bandit problems, $A_t$ and $R_t$ are the action and reward at time $t$. The book uses $R_{t+1}$ to denote the reward given for action $A_t$. MDPs introduce another time series, $S_t$, to denote the state at time $t$. Thus $S_t$ and $A_t$ "go together", and $S_{t+1}$ and $R_{t+1}$ are "jointly determined."

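- The probability distributions governing the dynamics of an MDP are given by the function below. Since all the spaces are finite, this is a probability mass function rather than a density:

  $$p(s', r \mid s, a) := \Pr(S_{t+1} = s', R_{t+1} = r \mid S_t = s, A_t = a)$$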

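Other useful equations are:

$$
\begin{aligned}
p(s' \mid s, a) &= \sum_{r \in \mathcal{R}} p(s', r \mid s, a), \\
r(s, a) &:= \mathbb{E}[R_t \mid S_{t-1} = s, A_{t-1} = a] = \sum_{r \in \mathcal{R}} r \sum_{s' \in \mathcal{S}} p(s', r \mid s, a), \\
r(s, a, s') &:= \mathbb{E}[R_t \mid S_{t-1} = s, A_{t-1} = a, S_t = s'] = \sum_{r \in \mathcal{R}} r \, \frac{p(s', r \mid s, a)}{p(s' \mid s, a)}.
\end{aligned}
$$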
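As a sanity check on these formulas, here is a minimal Python sketch that stores a made-up four-argument $p$ as a dictionary and derives the three quantities from it. The MDP, the state and action names (`s0`, `s1`, `go`), and the helper function names are all hypothetical, chosen only for illustration.

```python
# Hypothetical MDP for illustration only.
# p[(s, a)] maps (next_state, reward) -> probability, i.e. p(s', r | s, a).
p = {
    ("s0", "go"): {("s1", 1.0): 0.5, ("s1", 3.0): 0.3, ("s0", 0.0): 0.2},
    ("s1", "go"): {("s1", 0.0): 1.0},
}

def state_transition_prob(p, s, a, s_next):
    """p(s' | s, a): marginalize the reward out of p(s', r | s, a)."""
    return sum(prob for (s2, _), prob in p[(s, a)].items() if s2 == s_next)

def expected_reward(p, s, a):
    """r(s, a): sum over r and s' of r * p(s', r | s, a)."""
    return sum(r * prob for (_, r), prob in p[(s, a)].items())

def expected_reward_given_next(p, s, a, s_next):
    """r(s, a, s'): sum over r of r * p(s', r | s, a) / p(s' | s, a)."""
    denom = state_transition_prob(p, s, a, s_next)
    if denom == 0:
        # r(s, a, s') is undefined when s' cannot follow (s, a).
        raise ValueError("p(s' | s, a) is zero")
    num = sum(r * prob for (s2, r), prob in p[(s, a)].items() if s2 == s_next)
    return num / denom
```

With these numbers, $p(s_1 \mid s_0, \text{go}) = 0.8$ and $r(s_0, \text{go}) = 1.4$, while conditioning on landing in $s_1$ gives $r(s_0, \text{go}, s_1) = 1.4 / 0.8 = 1.75$, which shows why the three-argument version can differ from the two-argument one.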

Goals and Rewards

Note that, as with bandit problems, $R_t$ is stochastic. But the reward is also the only thing we can really tune about a given system. In practice, the reward is based on the full state-action-state transition, and therefore its randomness comes from the environment.

Key insight: keep rewards simple, with small, finite support. For some reason, I think of this as an extension of defining really simple prior distributions, perhaps because in this case the value (return) is determined by percolating rewards backwards from terminal states.

Returns and Episodes

Define a new random variable $G_t$ to be the return at time $t$. So if an agent interacts for $T$ time steps, this would be defined as

$$G_t = R_{t+1} + R_{t+2} + \cdots + R_T.$$

Unified Notation for Episodic and Continuing Tasks

Here, the book allows $T$ to be infinite. In this case, we need a discount factor for future rewards; otherwise the return could be a divergent series. Let $\gamma \in [0, 1]$ be the discount factor (possibly equal to 1 for finite episodes), so that

$$G_t := \sum_{k=0}^{\infty} \gamma^k R_{t+k+1} = R_{t+1} + \gamma G_{t+1}.$$

This unified notation is defined after discussing terminal states, which help deal with the problem of finite episodes. A terminal state is a sink (absorbing state) in the state-action graph: it only transitions to itself, and its reward is always zero. This lets us always use infinite sums, even for finite episodes.
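To check that the direct sum and the recursion $G_t = R_{t+1} + \gamma G_{t+1}$ agree, here is a small Python sketch. The reward list is made up, `rewards[k]` is assumed to hold $R_{k+1}$, and the function names are my own. Working backwards from $G_T = 0$ yields every $G_t$ in a single pass.

```python
def discounted_return_direct(rewards, gamma):
    """G_0 = sum over k of gamma^k * R_{k+1}, where rewards[k] holds R_{k+1}."""
    return sum(gamma ** k * r for k, r in enumerate(rewards))

def returns_backward(rewards, gamma):
    """All of G_0, ..., G_{T-1} via G_t = R_{t+1} + gamma * G_{t+1}, with G_T = 0."""
    returns = [0.0] * len(rewards)
    g = 0.0  # G_T = 0: nothing is collected after the final step
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns

rewards = [1.0, 0.0, 2.0]  # hypothetical R_1, R_2, R_3
print(returns_backward(rewards, 0.5))          # [1.5, 1.0, 2.0]
print(discounted_return_direct(rewards, 0.5))  # 1.5
```

Padding the reward list with zeros, which is all an absorbing terminal state contributes, leaves the direct sum unchanged: `discounted_return_direct(rewards + [0.0] * 10, 0.5)` still gives 1.5.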

Policies and Value Functions

A policy is a conditional distribution over actions, conditioned on a state:

$$\pi(a \mid s) := \Pr_\pi(A_t = a \mid S_t = s).$$
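One way to make "policy as a conditional distribution" concrete is a minimal Python sketch of a single turn of the agent-environment loop: the agent samples $A_t \sim \pi(\cdot \mid S_t)$, then the environment samples $(S_{t+1}, R_{t+1}) \sim p(\cdot, \cdot \mid S_t, A_t)$. The policy, the dynamics, and all state and action names here are hypothetical. Distributions are stored as `{outcome: probability}` dictionaries and sampled with the standard library's `random.Random.choices`.

```python
import random

# Hypothetical policy pi(a | s): state -> {action: probability}.
pi = {"s0": {"left": 0.25, "right": 0.75}}

# Hypothetical dynamics p(s', r | s, a): (state, action) -> {(next_state, reward): probability}.
p = {
    ("s0", "left"): {("s0", 0.0): 1.0},
    ("s0", "right"): {("s1", 1.0): 1.0},
}

# Every conditional distribution must sum to 1.
assert all(abs(sum(dist.values()) - 1.0) < 1e-9 for dist in pi.values())
assert all(abs(sum(dist.values()) - 1.0) < 1e-9 for dist in p.values())

def sample(dist, rng):
    """Draw one outcome from a {outcome: probability} dictionary."""
    outcomes = list(dist)
    weights = [dist[o] for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]

def step(s, rng):
    """A_t ~ pi(. | S_t), then (S_{t+1}, R_{t+1}) ~ p(., . | S_t, A_t)."""
    a = sample(pi[s], rng)
    s_next, r = sample(p[(s, a)], rng)
    return a, r, s_next

rng = random.Random(0)
print(step("s0", rng))  # either ('left', 0.0, 's0') or ('right', 1.0, 's1')
```

Over many calls, about three quarters of the sampled actions should be `right`, matching $\pi(\text{right} \mid s_0) = 0.75$.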

The value of a state is the expected return with respect to the policy distribution:

$$v_\pi(s) := \mathbb{E}_\pi[G_t \mid S_t = s].$$

The quality of an action $a$ at state $s$ is the expected return

$$q_\pi(s, a) := \mathbb{E}_\pi[G_t \mid S_t = s, A_t = a].$$

We call qπ the action-value function.

Exercise 3.12

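Give an equation for $v_\pi$ in terms of $q_\pi$ and $\pi$.

Solution:

$$
\begin{aligned}
v_\pi(s) &= \mathbb{E}_\pi[G_t \mid S_t = s] \\
&= \sum_{a \in \mathcal{A}} \mathbb{E}_\pi[G_t \mid S_t = s, A_t = a] \cdot \Pr(A_t = a \mid S_t = s) \\
&= \sum_{a \in \mathcal{A}} q_\pi(s, a) \, \pi(a \mid s).
\end{aligned}
$$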
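The result is just a $\pi$-weighted average of action values. A minimal Python sketch, with a made-up policy and made-up $q_\pi$ values (the function and state/action names are hypothetical):

```python
def v_from_q(q, pi, s):
    """v_pi(s) = sum over a of pi(a | s) * q_pi(s, a)."""
    return sum(prob * q[(s, a)] for a, prob in pi[s].items())

# Hypothetical policy and action values, for illustration only.
pi = {"s0": {"left": 0.25, "right": 0.75}}
q = {("s0", "left"): 2.0, ("s0", "right"): 4.0}

print(v_from_q(q, pi, "s0"))  # 3.5, i.e. 0.25 * 2.0 + 0.75 * 4.0
```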

Exercise 3.13

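Give an equation for $q_\pi$ in terms of $v_\pi$ and the four-argument $p$.

Solution:

$$
\begin{aligned}
q_\pi(s, a) &= \mathbb{E}_\pi[G_t \mid S_t = s, A_t = a] \\
&= \mathbb{E}_\pi[R_{t+1} + \gamma G_{t+1} \mid S_t = s, A_t = a] \\
&= \sum_{s', r} p(s', r \mid s, a) \left[ r + \gamma \, \mathbb{E}_\pi[G_{t+1} \mid S_t = s, A_t = a, S_{t+1} = s', R_{t+1} = r] \right] \\
&= \sum_{s', r} p(s', r \mid s, a) \left[ r + \gamma \, \mathbb{E}_\pi[G_{t+1} \mid S_{t+1} = s'] \right] \\
&= \sum_{s', r} p(s', r \mid s, a) \left[ r + \gamma \, v_\pi(s') \right]
\end{aligned}
$$

The fourth line follows from the third because of the Markov property: once $S_{t+1} = s'$ is known, the distribution of $G_{t+1}$ under $\pi$ no longer depends on $S_t$, $A_t$, or $R_{t+1}$.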
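A minimal Python sketch of this formula on a hypothetical one-action MDP: from state `s` the agent always receives reward 1 and, with probability 1/2 each, either stays in `s` or moves to the terminal state `T`, with $v_\pi(T) = 0$ and $\gamma = 1$. With only one action, Exercise 3.12 gives $v_\pi(s) = q_\pi(s, \text{stay})$, so the formula above says $v_\pi(s) = 1 + \tfrac{1}{2} v_\pi(s)$, i.e. $v_\pi(s) = 2$. Repeatedly substituting the current estimate back in (roughly what the book later calls iterative policy evaluation) converges to that value. The MDP and all names are assumptions for illustration.

```python
def q_from_v(v, p, s, a, gamma):
    """q_pi(s, a) = sum over (s', r) of p(s', r | s, a) * (r + gamma * v_pi(s'))."""
    return sum(prob * (r + gamma * v[s_next])
               for (s_next, r), prob in p[(s, a)].items())

# Hypothetical MDP: one non-terminal state "s", one action "stay", terminal state "T".
p = {("s", "stay"): {("s", 1.0): 0.5, ("T", 1.0): 0.5}}
gamma = 1.0

# Plugging in v_pi(s) = 2 reproduces q_pi(s, stay) = 2, so the two exercises agree.
print(q_from_v({"s": 2.0, "T": 0.0}, p, "s", "stay", gamma))  # 2.0

# Start from v = 0 and repeatedly substitute v(s) <- q(s, stay).
v = {"s": 0.0, "T": 0.0}  # v_pi(T) stays 0: a terminal state collects no more reward
for _ in range(60):
    v["s"] = q_from_v(v, p, "s", "stay", gamma)
print(v["s"])  # very close to 2.0
```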